A Categorical Perspective On Covariance

For functions $f, g$ on a measure space $(\Omega, \mu)$ (e.g. a real interval $[a, b]$) there is a well-known scalar product

$$ \langle f, g \rangle = \int_\Omega f g \, d\mu. $$

This scalar product is of fundamental importance to the study of functions and operators on measure spaces (functional analysis). For example, there is a rich theory of how to decompose functions on an interval into orthogonal Fourier components.

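If $\mu$ is a probability measure, we can regard $f, g$ as random variables. In this setting the viewpoint changes quite a bit. We give new names: $X = f$, $Y = g$. We are no longer interested in the underlying space $\Omega$, and also rarely consider the scalar product. Instead the focus lies on the expectation

$$ E[X] = \int_\Omega X \, d\mu $$

and the covariance

$$ Cov(X, Y) = E\big[(X - E[X])(Y - E[Y])\big]. $$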

This note considers the relation between these probabilistic notions and the functional-analytic point of view.

Constant Split

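Let $(\Omega, \mu)$ be a probability space. Consider the vector space of square-integrable functions (with functions identified if they agree almost everywhere):

$$ L^2(\Omega) = \Big\{ f: \Omega \to \mathbb{R} \;\Big|\; \int_\Omega f^2 \, d\mu < \infty \Big\}. $$

See e.g. Wikipedia for the details. We have the integral linear operator

$$ \int: L^2(\Omega) \to \mathbb{R}, \qquad f \mapsto \int_\Omega f \, d\mu. $$

The inclusion $\iota$ of the constant functions leaves us with a sequence:

$$ \mathbb{R} \xrightarrow{\;\iota\;} L^2(\Omega) \xrightarrow{\;\int\;} \mathbb{R} $$

whose composition is $\int \circ \, \iota = \mathrm{id}_{\mathbb{R}}$, since $\mu$ is a probability measure. We get an induced splitting of $L^2(\Omega)$ into constant functions plus functions with integral $0$:

$$ L^2(\Omega) = \mathbb{R} \oplus L^2_0(\Omega), \qquad L^2_0(\Omega) = \ker \textstyle\int. $$

A function $f$ can be decomposed accordingly into its constant part and its integral-0 part: $f = \int f \, d\mu + \big(f - \int f \, d\mu\big)$.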
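
The splitting can be made concrete on a finite probability space. The following is a minimal sketch, assuming three outcomes with made-up weights `mu` and a made-up function `f`; the helper names `integral` and `split` are illustrative and not part of the note itself. Exact fractions are used so that the identities hold without rounding.

```python
from fractions import Fraction

# Hypothetical finite probability space: three outcomes, weights sum to 1.
mu = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]

def integral(f):
    """Integral operator: weighted sum of the values of f."""
    return sum(value * weight for value, weight in zip(f, mu))

def split(f):
    """Return (constant part, integral-0 part) of f."""
    c = integral(f)
    return c, [value - c for value in f]

# Made-up function on the three outcomes.
f = [Fraction(3), Fraction(0), Fraction(6)]
const, centered = split(f)

# Integrating a constant function returns the constant,
# which is exactly the statement that the composition is the identity.
one = [Fraction(1)] * len(mu)
print(integral(one), const, centered, integral(centered))
```

Adding `const` back to every entry of `centered` recovers `f`, which is the decomposition into constant part plus integral-0 part.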

Bilinear Forms

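The space $L^2(\Omega)$ comes with a scalar product

$$ \langle f, g \rangle = \int_\Omega f g \, d\mu $$

which is positive definite (in particular non-degenerate) and complete, and thus makes this space into a Hilbert space.

The covariance product is defined as

$$ Cov(f, g) = \int_\Omega \big(f - E[f]\big)\big(g - E[g]\big) \, d\mu, \qquad E[f] = \int_\Omega f \, d\mu. $$

This product is bilinear but degenerate. The radical of $Cov$ consists precisely of the (almost everywhere) constant functions $\mathbb{R} \subset L^2(\Omega)$.

In the light of the above decomposition, we see that $Cov$ is the restriction of $\langle \cdot, \cdot \rangle$ to the space $L^2_0(\Omega)$ of integral-0 functions, extended back to $L^2(\Omega)$ using the projection $p: L^2(\Omega) \to L^2_0(\Omega)$, $f \mapsto f - E[f]$. In formulas: $Cov(f, g) = \langle p f, p g \rangle$.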
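
To see the relation between the two bilinear forms in numbers, here is a small sketch on a finite probability space. The weights `mu`, the functions `f` and `g`, and the helper names `inner`, `project` and `cov` are assumptions made for illustration. The covariance is computed as the scalar product of the centered parts and compared with the familiar formula $E[fg] - E[f]E[g]$.

```python
from fractions import Fraction

# Hypothetical finite probability space (weights sum to 1).
mu = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]

def integral(f):
    return sum(value * weight for value, weight in zip(f, mu))

def inner(f, g):
    """Scalar product: integral of the pointwise product."""
    return integral([a * b for a, b in zip(f, g)])

def project(f):
    """Projection onto the integral-0 functions: subtract the mean."""
    c = integral(f)
    return [a - c for a in f]

def cov(f, g):
    """Covariance as the scalar product of the projected functions."""
    return inner(project(f), project(g))

f = [Fraction(3), Fraction(0), Fraction(6)]
g = [Fraction(1), Fraction(2), Fraction(4)]
one = [Fraction(1)] * len(mu)

# Shifting f by a constant does not change the covariance.
f_shifted = [a + 10 for a in f]
print(cov(f, g), cov(f_shifted, g), cov(one, g), inner(one, one))
```

The constant function `one` pairs to zero with everything under `cov` but has scalar product 1 with itself, which illustrates that the covariance is degenerate while the scalar product is not.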

Conclusion

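- The space $L^2_0(\Omega)$ of integral-0 functions together with the covariance product is a Hilbert space.

- The natural inclusion $L^2_0(\Omega) \to L^2(\Omega)$ is isometric, and its adjoint linear operator is the projection $p: f \mapsto f - E[f]$.

- There is an orthogonal direct sum decomposition:

  $$ L^2(\Omega) = \mathbb{R} \oplus L^2_0(\Omega). $$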
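
The three statements can be checked on a toy example. This is only a sketch under assumptions: a finite probability space with made-up weights `mu`, a made-up function `f`, and an arbitrary integral-0 function `h`; all helper names are illustrative. Orthogonality of the decomposition shows up as the Pythagorean identity $\|f\|^2 = E[f]^2 + Cov(f, f)$.

```python
from fractions import Fraction

# Hypothetical finite probability space (weights sum to 1).
mu = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]

def integral(f):
    return sum(value * weight for value, weight in zip(f, mu))

def inner(f, g):
    return integral([a * b for a, b in zip(f, g)])

def project(f):
    c = integral(f)
    return [a - c for a in f]

f = [Fraction(3), Fraction(0), Fraction(6)]
one = [Fraction(1)] * len(mu)
# An arbitrary integral-0 function, obtained by centering a made-up one.
h = project([Fraction(1), Fraction(2), Fraction(4)])

mean_f = integral(f)
norm_sq = inner(f, f)
var_f = inner(project(f), project(f))

# Pythagoras for the orthogonal splitting into constant and integral-0 part.
pythagoras_holds = norm_sq == mean_f ** 2 + var_f
# Adjointness: pairing h with f equals pairing h with the projection of f.
adjoint_holds = inner(h, f) == inner(h, project(f))
# Constants are orthogonal to integral-0 functions.
constants_orthogonal = inner(one, h) == 0
print(pythagoras_holds, adjoint_holds, constants_orthogonal)
```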

Update 2015-05-17: Correlation as Cosine

The Pearson correlation is defined as:

$$ \rho(X, Y) = \frac{Cov(X, Y)}{\sqrt{Cov(X, X) \, Cov(Y, Y)}}. $$

It measures the linear dependence between two random variables. E.g. in the case of a discrete probability measure obtained from a sample, the squared correlation is the ratio between the explained variance in a linear regression and the total variance of the sample (cf. Wikipedia).

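In analogy to the Euclidean plane, we define the cosine similarity between two functions $f, g$ by

$$ \cos(f, g) = \frac{\langle f, g \rangle}{\|f\| \, \|g\|}, \qquad \|f\| = \sqrt{\langle f, f \rangle}. $$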

Hence for centered functions (those with $E[f] = E[g] = 0$) we have

$$ \rho(f, g) = \cos(f, g) $$

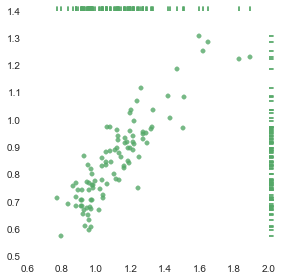

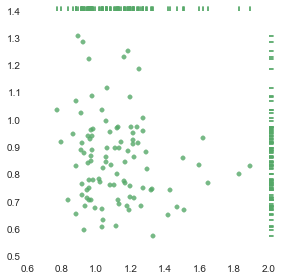

which gives a surprising relation between two different geometric interpretations of the same data:

- Regression line of the coefficient pairs $(X_i, Y_i)$, $i = 1, \dots, n$, in $\mathbb{R}^2$

- Angle between the vectors $X$ and $Y$ in $\mathbb{R}^n$.
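
The agreement between correlation and cosine for centered data can be checked numerically. The sketch below assumes a made-up sample of four points with the uniform probability measure; the data and the helper names are purely illustrative. Note that the factor $1/n$ of the uniform measure cancels in both ratios, so the plain Euclidean dot product in $\mathbb{R}^n$ can be used for the cosine.

```python
import math

# Made-up sample of four coefficient pairs (x_i, y_i).
x = [1.0, 2.0, 4.0, 7.0]
y = [2.0, 1.0, 5.0, 6.0]

def mean(v):
    return sum(v) / len(v)

def center(v):
    m = mean(v)
    return [a - m for a in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    """Cosine of the angle between two vectors in R^n."""
    return dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))

def pearson(u, v):
    """Pearson correlation from the covariance definition (uniform measure)."""
    n = len(u)
    mu_u, mu_v = mean(u), mean(v)
    cov_uv = sum((a - mu_u) * (b - mu_v) for a, b in zip(u, v)) / n
    var_u = sum((a - mu_u) ** 2 for a in u) / n
    var_v = sum((b - mu_v) ** 2 for b in v) / n
    return cov_uv / math.sqrt(var_u * var_v)

rho = pearson(x, y)
cos_centered = cosine(center(x), center(y))
cos_raw = cosine(x, y)  # without centering the two notions differ in general
print(rho, cos_centered, cos_raw)
```

Centering matters: the cosine of the raw vectors is in general a different number, so the identity really is a statement about the integral-0 parts.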

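Note that for centered functions ($E[X] = E[Y] = 0$) the regression line will always pass through the origin, and the slope can be calculated as

$$ \beta = \frac{\langle X, Y \rangle}{\langle X, X \rangle} = \cos(X, Y) \, \frac{\|Y\|}{\|X\|}. $$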
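
As a small numerical companion, the slope of the regression line can be computed for centered data. This sketch assumes the standard least-squares slope $\langle X, Y \rangle / \langle X, X \rangle$ for regressing $Y$ on $X$, a made-up sample of four points, and illustrative helper names. It compares the slope with the cosine rescaled by the ratio of the norms.

```python
import math

# Made-up sample of four coefficient pairs (x_i, y_i).
x = [1.0, 2.0, 4.0, 7.0]
y = [2.0, 1.0, 5.0, 6.0]

def center(v):
    m = sum(v) / len(v)
    return [a - m for a in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

cx, cy = center(x), center(y)

# Least-squares slope of the line through the origin fitted to the centered pairs.
# The 1/n factor of the uniform measure cancels in the ratio.
beta = dot(cx, cy) / dot(cx, cx)

# The same slope expressed through the cosine and the two norms.
cos_xy = dot(cx, cy) / math.sqrt(dot(cx, cx) * dot(cy, cy))
beta_from_cos = cos_xy * math.sqrt(dot(cy, cy) / dot(cx, cx))

# The residual cy - beta * cx is orthogonal to cx (the normal equation).
residual = [b - beta * a for a, b in zip(cx, cy)]
print(beta, beta_from_cos, dot(residual, cx))
```

Geometrically, `beta * cx` is the orthogonal projection of `cy` onto the line spanned by `cx`, which is where the cosine enters.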

However, at the moment I do not see how this helps to understand the above observation. My feeling is that there should be a more conceptual reason for it. By interpreting $X$ and $Y$ as maps, we can bring duality theory for vector spaces into play and maybe gain more insight from this perspective.